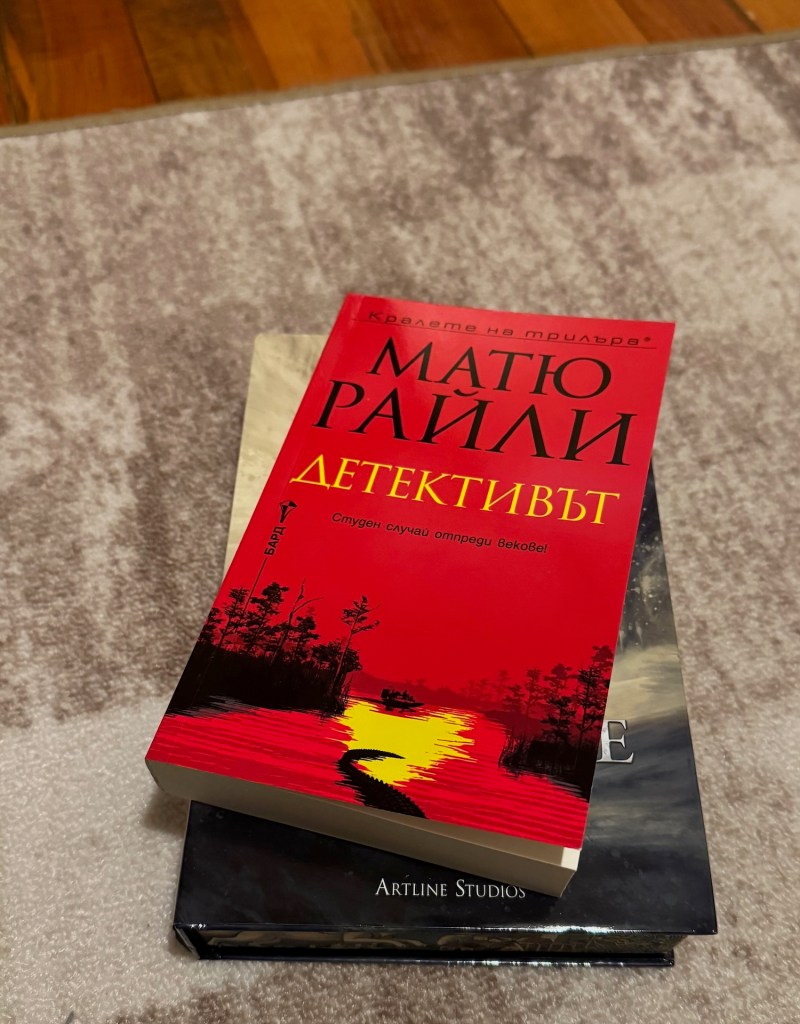

A private investigator reopens a cold case involving a serial killer who has been abducting victims for over 150 years.

I’m a fan of Matthew Reilly and his wildly unrealistic thrillers. Based on the description of this one, I was expecting aliens at the very least. It turned out to be far less extraordinary than that, but it still has very much the right style and grandiosity. An easy, fast read. 5/5, although you should not expect deep characters or anything like that. Despite the heavy topic, it’s mostly a bubblegum thriller.

The book below is The Hunger of the Gods, for scale and color.