It’s time for the EU and other regulators to reconsider the deal we’ve made with search engines and how companies like Google are redefining it without consent.

Originally, we allowed Google and other search engines to index our content for free in exchange for traffic. This made sense: we paid for hosting, created content, and in return got visitors from search. The search engines profited from ads and from reordering search results in favor of advertisers. But the rise of generative AI has changed the terms.

Now, Google uses our content not just to link to us, but to generate full answers on its own platform, keeping users from ever visiting our sites. This shift erodes the value we once received. Meanwhile, Google and a small group of others continue to monetize the interaction through ads and AI subscriptions.

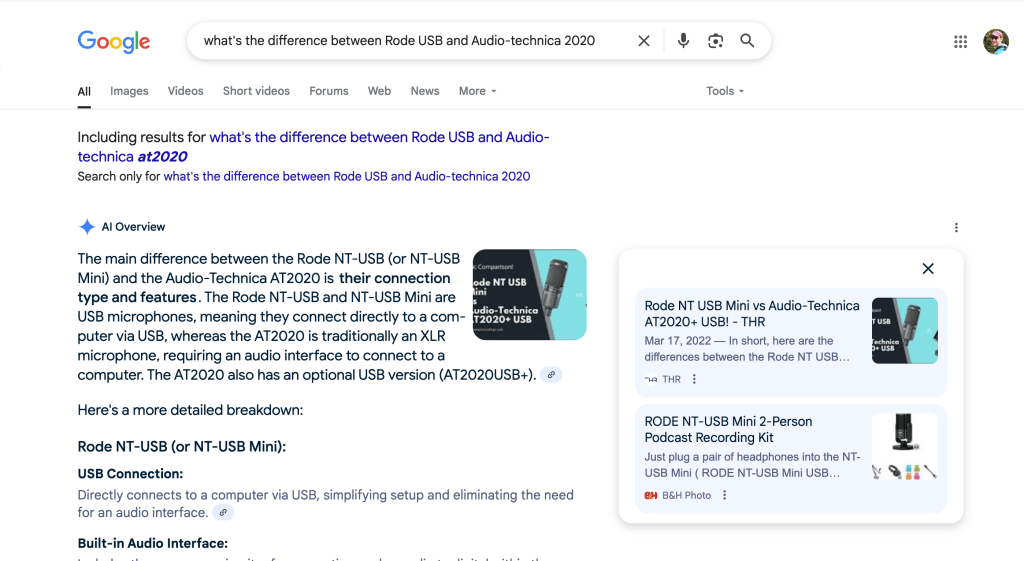

Google Search results these days barely feature any links and highlight internal content

SEO experts talk about “GEO” (Generative Engine Optimization), but in reality no clear playbook exists for it, and most content creators are seeing smaller and smaller returns. There’s no proven way to optimize for Gemini or OpenAI’s models, especially when those tools send so little traffic back. The only instance of GEO I’ve seen involved a meme: a prankster optimized (on purpose or not) a tweet about the size of a blue whale’s vagina compared with a specific politician, and Gemini picked it up.

At the same time, website owners still bear all the costs: paying for hosting, paying for content creation, and now even paying for AI tools that were trained on their own data. This suffocates the open web so that LLM companies can sustain hockey-stick growth toward trillion-dollar valuations.

Should crawling be free in the AI era?

OpenAI at least pays for access to some datasets. But many of these datasets were built through unrestricted crawling or by changing terms of service after the fact. Google doesn’t even do that. It simply applies its AI to search and displays the result back to the user.

Regulation is needed yesterday. The EU’s Digital Markets Act already limits self-preferencing. Why not extend similar rules to web data? Possibilities include:

- A licensing system for crawlers

- Mandatory transparency around crawling and training data

- A revenue-sharing model for publishers

As for GEO, it will likely turn out to be more of the same: endless spam content generated to feed the models, exploiting knowledge of how they scrape data. It doesn’t feel useful yet, and if that’s the future, we can only expect the enshittification of generative AI.

Excellent post. I agree wholeheartedly with you. The time has come for some ethics.

Thanks!

Crawling is like infringing someone’s copyright or stealing their data. I’m totally against it, but it’s big business and most governments do what benefits them. What a shame.

Well said. Someone has to step in to help us have more control over our content. Maggie

Totally agree.

That makes a lot of sense, but I’m not quite sure how that would work in practice.

How do you think it should work? Should the site owners be responsible for reaching out to Google and other crawlers? Should Google be the one reaching out, asking for permission, or just offering a link to a page where one could enter their payment details?

Why would Google do it if other search engines don’t?

How should site owners enforce this, other than blocking access to their site for specific user agents (knowing that many crawlers do not set a proper User Agent)?

I’m leaning toward regulation of some kind just because I can’t come up with a theoretical scenario that truly prevents search engines and crawlers from stealing websites and then presenting retold stories as their own content. This is happening with text, images and video on a massive scale at the moment.

It’s not easy to stop malicious crawling. The entire Internet would have to become authenticated, browser profiles would have to be authenticated, and people would only ever be able to view human-made content while logged in. And even that wouldn’t do much.

It is much easier to make a rule that a search engine cannot summarize websites and that an AI chatbot must link to the original sources, with a fine for each violation.

Dox the people, remove the anonymity of “some company” and let nature take its course. If these people knew there would be real world consequences to their decisions, things might be different. Emphasis on might, however.

This paints a bleak picture. I was hoping for AI to get better, not worse.

It will become better for the owners who monetise it.

And the enshittification continues.

Do you think the AI will ever become smarter than the owners and start searching for what is true and good without supervision or firewalls? Removing money from the equation would definitely solve it, but that’s probably not going to happen.

It may be that it’s up to us to create quality content, and then up to us to support it by consuming it and searching for more.

AI is already smarter than humans in certain areas. It has no initiative or creativity and I don’t think that will change soon.

The worst part for now is that it doesn’t learn. Coding with it is like coding with a genius five-year-old who knows everything but, when it doesn’t know something, can’t learn it.

Google scraping 18x more than it sends back in traffic is unfair. Time for regulators to make search engines pay publishers fairly.

Yes, at this point I could block Google on my site and nothing would change. But most scrapers don’t respect robots.txt, so it’s pointless.
